Loan Risk Prediction Model - Smarter Decisions With Data

Challenges Faced & Solutions in Building the Loan Prediction Model

Developing the loan risk prediction model came with a series of technical and analytical challenges, each requiring critical thinking and problem-solving to overcome. Here’s how I tackled them:

1. Challenge: Incomplete & Noisy Data

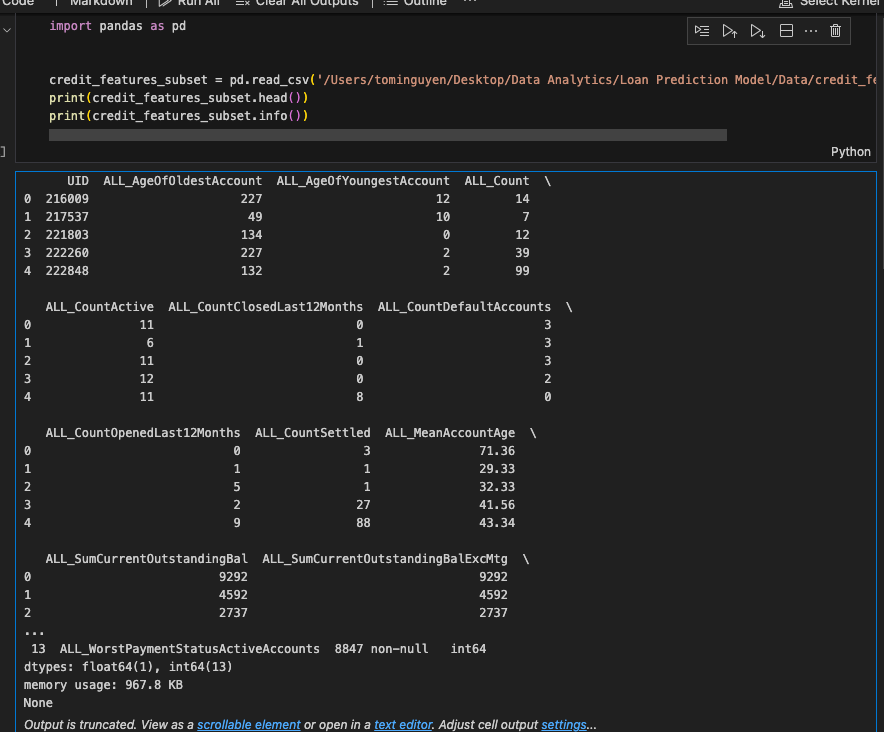

Problem: The loan dataset contained missing values in key fields like income, credit history, and loan amount. Some records had inconsistencies, making it difficult to build a reliable model.

Solution:

• Data Cleaning & Imputation – Used Pandas to detect missing values, then applied mean imputation to numerical variables and mode imputation to categorical variables.

• Outlier Detection – Applied Z-score and IQR methods to identify and handle extreme values.

• Feature Engineering – Created new features from existing ones (e.g., Debt-to-Income Ratio) to improve model accuracy.
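As a rough illustration of the steps above (not the exact project code), here is a minimal sketch on toy data. The column names (`income`, `loan_amount`, `monthly_debt`, `credit_history`) and the helper functions `clip_iqr` and `impute` are hypothetical. It shows only the IQR method, and it clips before imputing so that an extreme value does not skew the imputed mean; that ordering is an assumption of this sketch.

```python
import numpy as np
import pandas as pd

# Toy data; the column names are hypothetical, not the project's real schema.
df = pd.DataFrame({
    "income": [4200.0, np.nan, 3100.0, 5800.0, 250000.0, 3900.0],
    "loan_amount": [120.0, 95.0, np.nan, 150.0, 110.0, 130.0],
    "monthly_debt": [900.0, 400.0, 650.0, 1200.0, 800.0, 700.0],
    "credit_history": ["good", "good", None, "bad", "good", "good"],
})


def clip_iqr(series, k=1.5):
    """Clip values outside [Q1 - k*IQR, Q3 + k*IQR]; missing values stay missing."""
    q1, q3 = series.quantile(0.25), series.quantile(0.75)
    iqr = q3 - q1
    return series.clip(lower=q1 - k * iqr, upper=q3 + k * iqr)


def impute(frame):
    """Fill numeric columns with their mean and all other columns with their mode."""
    out = frame.copy()
    for col in out.columns:
        if pd.api.types.is_numeric_dtype(out[col]):
            out[col] = out[col].fillna(out[col].mean())
        else:
            out[col] = out[col].fillna(out[col].mode().iloc[0])
    return out


# Count missing values per column before cleaning.
print(df.isna().sum())

clean = df.copy()
clean["income"] = clip_iqr(clean["income"])  # tame the extreme income first
clean = impute(clean)                        # then fill the gaps

# Engineered feature: share of income that goes to existing debt payments.
clean["debt_to_income"] = clean["monthly_debt"] / clean["income"]
print(clean)
```

Clipping keeps the row while limiting the influence of the outlier; dropping such rows instead is an equally reasonable choice when the values are clearly data-entry errors.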

Key Takeaway: Ensuring data integrity is critical before building predictive models.

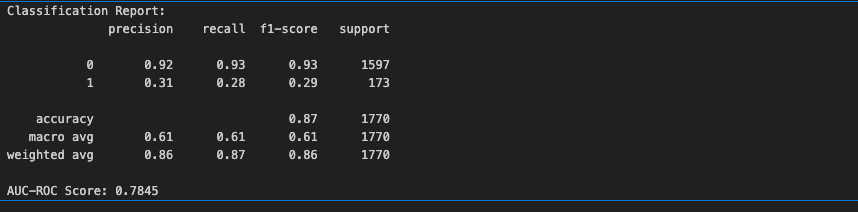

2. Challenge: Imbalanced Classes (Bias in Loan Approvals)

Problem: The dataset had more approved loans than rejected ones, leading to a bias in which the model predicted approvals more often, reducing its effectiveness in identifying risky applicants.

Solution:

• Resampling Techniques – Used SMOTE (Synthetic Minority Over-sampling Technique) to balance the dataset.

• Custom Cost Function – Adjusted the model’s loss function to penalize misclassified high-risk loans, improving prediction fairness.

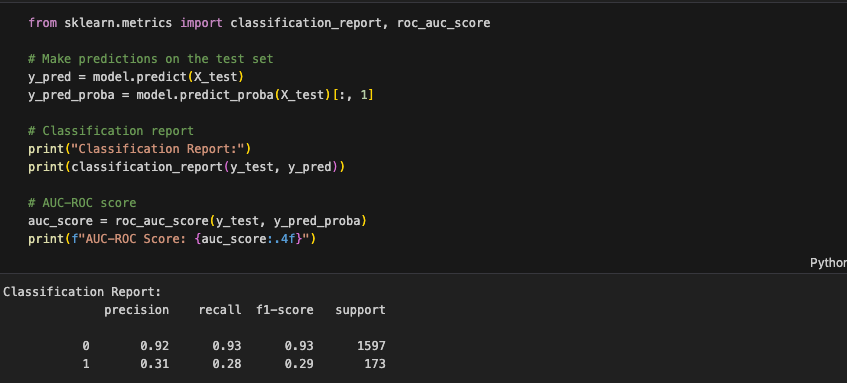

• Precision-Recall Tuning – Focused on recall for rejected loans, ensuring riskier applicants were flagged correctly.
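To make the cost-weighting and recall-tuning ideas above concrete, here is a minimal sketch on synthetic data, assuming scikit-learn. It is not the project's actual pipeline: it uses `class_weight="balanced"` as a simple form of cost-sensitive loss rather than SMOTE (which lives in the separate imbalanced-learn package), and the `TARGET_RECALL` value is a hypothetical choice.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import precision_recall_curve, precision_score, recall_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in data: label 1 = high-risk (rejected), roughly 20% of rows.
X, y = make_classification(
    n_samples=2000, n_features=8, weights=[0.8, 0.2], random_state=0
)
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

# class_weight="balanced" scales each sample's loss inversely to its class
# frequency, so mistakes on the minority (high-risk) class cost more.
model = LogisticRegression(class_weight="balanced", max_iter=1000)
model.fit(X_train, y_train)

# Predicted probability of the high-risk class on the validation split.
risk_scores = model.predict_proba(X_val)[:, 1]
precision, recall, thresholds = precision_recall_curve(y_val, risk_scores)

TARGET_RECALL = 0.90  # hypothetical target, not a figure from the project

# precision and recall have one more entry than thresholds; drop the final
# point (recall = 0), then keep the highest threshold that still meets the target.
meets_target = recall[:-1] >= TARGET_RECALL
threshold = thresholds[meets_target].max()

flagged = (risk_scores >= threshold).astype(int)
print("threshold:", round(float(threshold), 3))
print("recall on high-risk:", round(recall_score(y_val, flagged), 3))
print("precision on high-risk:", round(precision_score(y_val, flagged), 3))
```

The threshold is picked on a validation split here; the resulting recall and precision should still be confirmed on a separate held-out test set.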

Key Takeaway: Models must be trained to detect high-risk cases, even in imbalanced datasets.

3. Challenge: Choosing the Right Algorithm

Problem: Initial models (Logistic Regression, Decision Trees) performed poorly, either overfitting or underfitting the data. Finding a balance between accuracy, interpretability, and efficiency was challenging.

Solution:

•

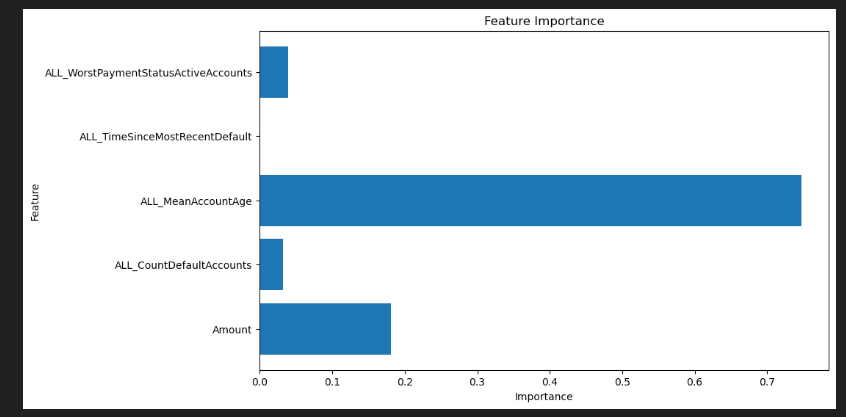

Compared Multiple Models – Tested Random Forest, XGBoost, and Neural Networks, selecting the best based on AUC-ROC scores and F1-score.

• Hyperparameter Tuning – Used GridSearchCV and RandomizedSearchCV to optimize model parameters.

• Feature Selection – Reduced dimensionality by removing redundant variables to improve efficiency.
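The comparison and tuning steps above can be sketched roughly as follows, assuming scikit-learn and synthetic data. To keep the example self-contained, scikit-learn's `GradientBoostingClassifier` stands in for XGBoost, the neural network is omitted, and the parameter grid is purely illustrative.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import RandomizedSearchCV, cross_validate

# Synthetic stand-in for the loan data (about 25% positive class).
X, y = make_classification(
    n_samples=600, n_features=10, weights=[0.75, 0.25], random_state=0
)

candidates = {
    "logistic_regression": LogisticRegression(max_iter=1000),
    "random_forest": RandomForestClassifier(n_estimators=50, random_state=0),
    # Stand-in for XGBoost so the sketch needs only scikit-learn.
    "gradient_boosting": GradientBoostingClassifier(random_state=0),
}

# Score every candidate with the same folds and the same two metrics.
results = {}
for name, estimator in candidates.items():
    cv = cross_validate(estimator, X, y, cv=3, scoring=["roc_auc", "f1"])
    results[name] = {
        "roc_auc": cv["test_roc_auc"].mean(),
        "f1": cv["test_f1"].mean(),
    }

for name, scores in results.items():
    print(name, {metric: round(value, 3) for metric, value in scores.items()})

best_name = max(results, key=lambda n: results[n]["roc_auc"])
print("best by AUC-ROC:", best_name)

# Randomized search over a small, illustrative grid for one of the candidates.
search = RandomizedSearchCV(
    RandomForestClassifier(random_state=0),
    param_distributions={
        "n_estimators": [50, 100],
        "max_depth": [3, 5, None],
        "min_samples_leaf": [1, 5],
    },
    n_iter=4,
    scoring="roc_auc",
    cv=3,
    random_state=0,
)
search.fit(X, y)
print("best params:", search.best_params_)
```

`RandomizedSearchCV` samples a fixed number of combinations, which is usually cheaper than the exhaustive `GridSearchCV` when the grid is large.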

Key Takeaway: Model selection isn’t just about accuracy; it’s about balancing interpretability, efficiency, and performance.

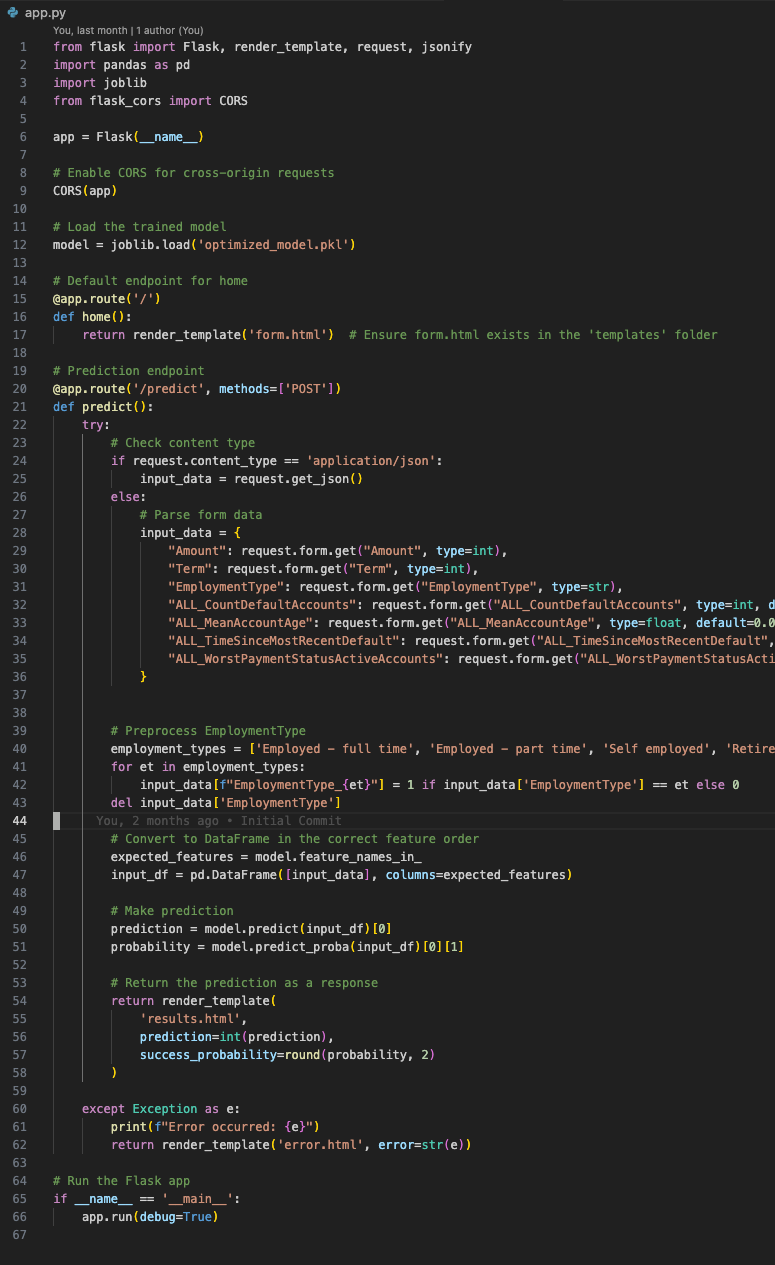

4. Challenge: Deploying the Model as a Web App

Problem: Transitioning from Jupyter Notebook to a fully functional web app required integrating the model with a user-friendly interface, making predictions accessible to non-technical users.

Solution:

• Built a Flask API – Created endpoints for real-time predictions using the trained model.

• Developed an Interactive UI – Used HTML, CSS, JavaScript to design a simple, intuitive front-end.

• Dockerized the Application – Packaged the model for scalability and deployment on cloud platforms.
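For illustration, a prediction endpoint along the lines described above might look like the sketch below. The `/predict` route, the `FEATURES` list, and the `score_applicant` function are all hypothetical; in particular, `score_applicant` is a placeholder where the trained model would be loaded and called.

```python
from flask import Flask, jsonify, request

app = Flask(__name__)

# Hypothetical input fields; the real app's schema may differ.
FEATURES = ["income", "loan_amount", "debt_to_income"]


def score_applicant(features):
    """Placeholder for the trained model: returns a risk score in [0, 1]."""
    return min(1.0, max(0.0, features["debt_to_income"]))


@app.route("/predict", methods=["POST"])
def predict():
    # silent=True returns None instead of raising on a missing or invalid JSON body.
    payload = request.get_json(silent=True) or {}
    missing = [name for name in FEATURES if name not in payload]
    if missing:
        return jsonify({"error": f"missing fields: {missing}"}), 400
    try:
        features = {name: float(payload[name]) for name in FEATURES}
    except (TypeError, ValueError):
        return jsonify({"error": "all fields must be numeric"}), 400

    risk = score_applicant(features)
    return jsonify({"risk_score": risk, "high_risk": risk >= 0.5})
```

Saved as `app.py`, this can be started locally with `flask --app app run`, and the front-end can then POST the form values as JSON to `/predict`. Validating the input in the endpoint keeps malformed requests from ever reaching the model.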

Key Takeaway: A model is only valuable if it’s usable and accessible to decision-makers.

Final Reflection: What I Learned

• Data quality is everything – A bad model often means bad data, not a bad algorithm.

• Bias must be addressed – Ensuring fairness in AI-driven lending decisions is critical for ethical AI.

• End-to-End Thinking – From data cleaning to model deployment, every step must be integrated with business needs.

This project strengthened my problem-solving skills, enhanced my ability to build practical machine learning solutions, and demonstrated my ability to bridge technical knowledge with business impact.

Please email me at Tomitjnguyen@gmail.com with any questions or feedback.